General concerns

Brief history of GUI automation

The myth about coordinate-based test automation

Most people have probably heard the myths about coordinate-based test automation. They derive from a time when test automation tool vendors sold support for each technology separately, and getting that support working for each technology was troublesome. When the technology support was missing or misconfigured, these tools fell back to identifying elements by coordinates within the closest recognizable container object (usually the application window). Anyone who was around in that era has seen this kind of fallback solution, but it was never how the tools were meant to work.

If you have ever encountered coordinate-based test automation, it means someone failed to use the tool correctly.

A plenitude of tools

Up until about 2000 the test automation tool vendors were few, and all of their tools were proprietary. During the open source movement of the early 2000s, the number of test automation tools exploded.

Nowadays most tools in use are open source and free of license costs (every tool has a cost of use, but hopefully its value exceeds that cost). This is largely because license fees would make modern multi-tier environments costly to administer.

Tool types

Enterprise solutions

In organizations with a blue personality type you may still see enterprise test automation tools such as Micro Focus UFT/QTP, Worksoft Certify, or similar.

These are quite capable tools that support a myriad of different technologies.

These tools never seem to be a perfect fit for any specific technological environment, but they offer a similar approach to test automation regardless of technology.

This category of tools often targets employees with limited technical skills, providing many features that shift the learning toward tool-specific knowledge rather than programming skills or technological insight.

Programmatic tools

In terms of tool count, programmatic tools far outnumber any other type of tool. Many of these tools target very specific environments.

For example, there are tools specifically targeting React, Angular, and Vue.

The lifetime of these tools is as limited as that of the frameworks themselves, since they are intimately tied to the development tools they support.

Some programmatic tools include a GUI to ease automation, for example Selenium IDE for identifying usable XPaths, or GUI spy tools for understanding the structure and properties of a GUI.

Scriptless tools

Scriptless test automation tools have been marketed for decades, and in the last couple of years genuinely useful tools in this category have emerged as real alternatives to scripted tools. This category includes, for example, Ranorex and Panaya.

Image recognition based tools

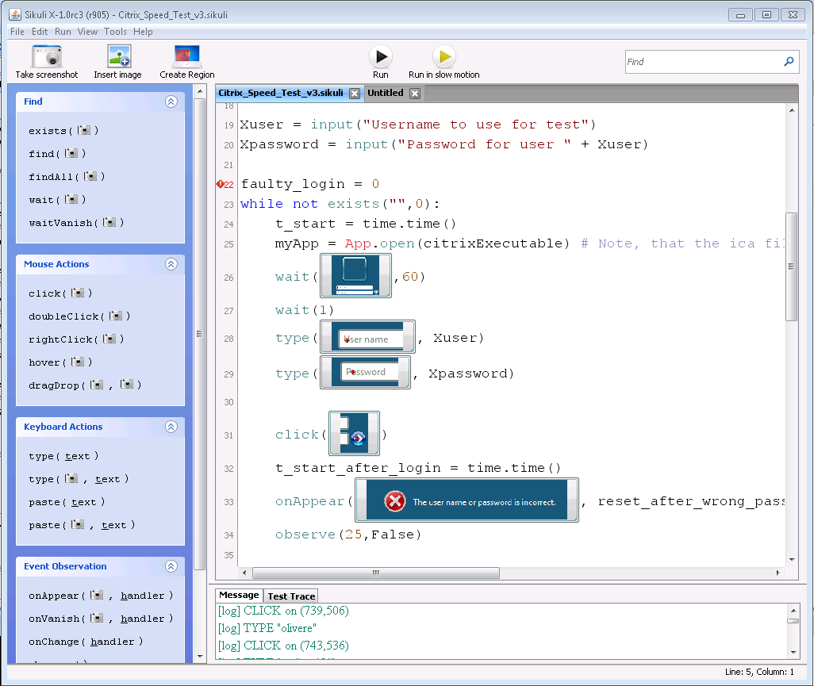

Tools like EyeAutomate, JAutomate, EggPlant, and Sikuli interact with the system through a series of partial screenshot matches rather than through the properties of the elements in the system.

At first thought this seems a bit rigid, since it must depend on screen resolution, display color depth, and so forth. However, most of these tools use clever mechanisms to overcome these risks.

For example, the tolerance level may be configurable, and the image may be vectorized and converted to grayscale at runtime, making execution far more robust.
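To make the tolerance idea concrete, here is a minimal Python sketch of template matching on grayscale pixels. It is not how EyeAutomate, Sikuli, or any other tool named here works internally; the function names, the ITU-R BT.601 luminance weights, and the mean-absolute-difference tolerance are assumptions chosen for illustration. Images are plain lists of RGB tuples so the example has no dependencies.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # list of rows
Gray = List[List[float]]

def to_grayscale(image: Image) -> Gray:
    """Convert RGB pixels to luminance (ITU-R BT.601 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in image]

def find_template(screen: Image, template: Image,
                  tolerance: float = 10.0) -> Optional[Tuple[int, int]]:
    """Return the (x, y) of the best match whose mean absolute grayscale
    difference is within `tolerance`, or None if no region is close enough."""
    s, t = to_grayscale(screen), to_grayscale(template)
    th, tw = len(t), len(t[0])
    best_diff, best_pos = None, None
    # Slide the template over every position where it fits on the screen.
    for y in range(len(s) - th + 1):
        for x in range(len(s[0]) - tw + 1):
            diff = sum(abs(s[y + dy][x + dx] - t[dy][dx])
                       for dy in range(th) for dx in range(tw)) / (th * tw)
            # Accept only regions within tolerance; keep the closest one.
            if diff <= tolerance and (best_diff is None or diff < best_diff):
                best_diff, best_pos = diff, (x, y)
    return best_pos
```

Because the comparison uses grayscale values and a tolerance rather than exact pixel equality, a slightly different shade (for example caused by another color depth) still matches, while genuinely different regions do not.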

This type of automation is very demoable, and the scripts are very intuitive.

The main use for these tools is systems where you have very limited contact with the developers and very little mandate to influence the system under test.

Communication and common commitment always win over special technical solutions, but if you need to be certain that something involving GUI tests works, and you have no means of securing long-term testability, these types of tools may come in handy.

AI based tools

The most recent addition to the test automation flora is AI (Artificial Intelligence) based tools. There are a few different categories of these tools.

Tools that use AI for element identification

Apart from using normal element properties to identify the elements to interact with, these tools also store all runtime properties of each element when it is identified.

If the element cannot be identified at runtime for some reason, an algorithm is invoked that uses the stored data to find the most relevant candidate for the intended element.

Some of these tools also take the screen position of the element into account.

Examples of properties used as a basis for recognition:

- Text

- Element type

- Attributes (id, value, name, class, and so forth)

- Size

- Position

- Parent element

- Label

- Listeners

- Child elements

This approach has the potential to significantly reduce maintenance cost, since many of the deviations caused by GUI layout changes can self-heal. Such changes are common while new features are being implemented, and less common as the system under test matures.
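The fallback described above can be sketched as a weighted similarity score between the stored properties and each candidate element. This is a minimal Python illustration, not the algorithm of any particular AI-based tool: the property weights, the 0.5 threshold, the exact-equality comparison, and the `similarity` and `locate` helpers are all hypothetical, and elements are represented as plain dictionaries of string properties.

```python
from typing import Dict, List, Optional

Element = Dict[str, str]  # property name -> value captured at runtime

# Hypothetical weights: how much each stored property counts toward a match.
WEIGHTS = {"id": 5.0, "name": 3.0, "text": 3.0, "label": 2.0,
           "type": 2.0, "class": 1.0, "parent": 1.0}

def similarity(stored: Element, candidate: Element) -> float:
    """Weighted share (0.0-1.0) of stored properties the candidate still has."""
    total = sum(WEIGHTS.get(key, 1.0) for key in stored)
    hits = sum(WEIGHTS.get(key, 1.0)
               for key, value in stored.items() if candidate.get(key) == value)
    return hits / total if total else 0.0

def locate(stored: Element, elements: List[Element],
           primary_key: str = "id", threshold: float = 0.5) -> Optional[Element]:
    # 1. Normal identification: exact match on the primary locator.
    for element in elements:
        if primary_key in stored and element.get(primary_key) == stored[primary_key]:
            return element
    # 2. Self-healing fallback: best-scoring candidate, if it clears the threshold.
    best = max(elements, key=lambda el: similarity(stored, el), default=None)
    if best is not None and similarity(stored, best) >= threshold:
        return best
    return None
```

In a real tool, numeric properties such as size and position would need a distance measure rather than exact equality, and it is probably wise to report each healed match so the stored properties can be reviewed and updated.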

Web based testing

Web-based test automation tools tend either to disregard the browser completely, running their own browser engine, or to interact with the browser through browser API commands (as Selenium does) or by injecting JavaScript into the page (like Jasmine).

Rich clients

Nowadays the GUI of most applications is implemented to run in a web browser. Web-based systems have several advantages: all clients are on the same version at all times, the client footprint is lightweight, and platform-dependent compatibility issues are limited.

There are still a lot of heavy clients around from the era when a client/server architecture was necessary for systems to be usable, and at times there are still arguments for local clients as well.

Testing rich clients is something of a beast: the number of different technologies, platforms, and frameworks is huge.

Examples of common frameworks out there:

- Java AWT/Swing

- WPF (Windows Presentation Foundation)

- Windows Forms

- Oracle Forms

- Progress OpenEdge

Testing with the JVM (Java Virtual Machine)

Java-based rich clients come with an extra obstacle: the JVM (Java Virtual Machine) has a very locked-down architecture.

Once a program has been started in a JVM, it takes a lot of hacking to break into that JVM and interact with the program.

One way around this problem is to implement a "child-first classloader" in your test module and then load the application under test into that classloader.

This way the application under test runs independently of the test code, while the test code has full access to the application under test.

With some reflection you can even alter the application at runtime if needed, for example making private classes and methods accessible for interaction.

Be aware that if you tamper with the system under test in this way, the test results may be affected too, so some risks may remain untested.

Rich client structure

Anonymous classes and methods

Structuring GUI based test automation

Page objects

Specification by Example and Gherkin (e.g. Cucumber/Specflow)

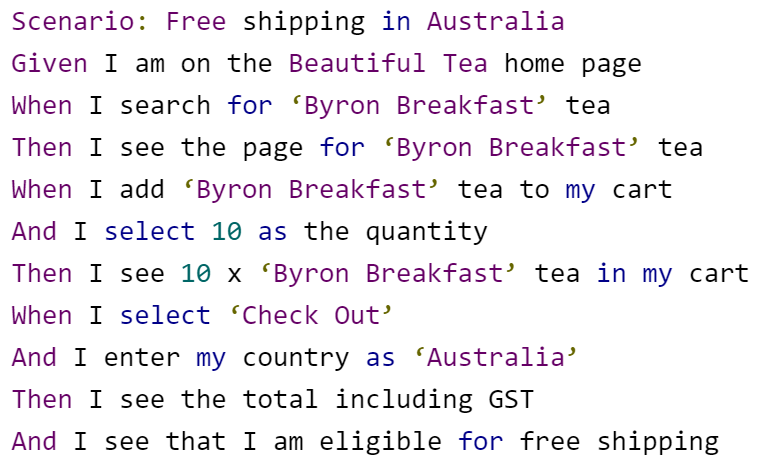

One way of making test automation easier to understand, and easier to maintain by introducing some structure, is to use a structured syntax. The most popular solution for this over the last decade has been the Gherkin syntax.

When the expectations on the system under test are written in a GIVEN, WHEN, THEN keyword syntax, mapping code sections to phrases is pure code mechanics.
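This mapping can be illustrated with a small, hypothetical step registry in Python: each step function is registered with a regular expression, and each Given/When/Then/And/But line is matched against those expressions. This is not the Cucumber, SpecFlow, or behave API; the `step` decorator and `run_scenario` function are illustrative names, and the parser ignores every line that is not a step.

```python
import re

# Registry of (compiled regular expression, step function) pairs.
STEPS = []

def step(pattern):
    """Register a function as the implementation of a Gherkin phrase."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register

def run_scenario(text, context):
    """Execute each step line by finding the registered phrase it matches."""
    for line in text.strip().splitlines():
        line = line.strip()
        keyword, _, phrase = line.partition(" ")
        if keyword not in ("Given", "When", "Then", "And", "But"):
            continue  # skip Feature/Scenario headers and blank lines
        for pattern, func in STEPS:
            match = pattern.fullmatch(phrase)
            if match:
                func(context, *match.groups())  # captured groups become arguments
                break
        else:
            raise LookupError(f"No step definition for: {line}")

@step(r"an empty shopping cart")
def empty_cart(ctx):
    ctx["cart"] = []

@step(r'the user adds "([^"]+)" to the cart')
def add_item(ctx, item):
    ctx["cart"].append(item)

@step(r"the cart contains (\d+) items?")
def cart_count(ctx, count):
    assert len(ctx["cart"]) == int(count)
```

An unmatched phrase raises an error, which corresponds to the point where the test automation staff would need to write a new step definition.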

Through the Gherkin syntax, something called Living Documentation is achieved. This means that if a requirement is updated, the corresponding test automation is updated too, or at least the test automation staff get a notification that the test automation needs attention.

Navigation graphs

Page objects introduce an abstraction that exposes behavior rather than the system itself, making it easier to cope with changes to the system under test.
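A minimal Python sketch of a page object might look like the following. `FakeDriver` is a hypothetical stand-in for a real GUI driver such as a Selenium WebDriver, and the locators and page classes are illustrative; the point is that tests call `log_in_as()` instead of touching individual widgets.

```python
class FakeDriver:
    """Hypothetical stand-in for a real GUI driver, recording what happens."""
    def __init__(self):
        self.fields = {}
        self.clicked = []
        self.title = "Login"

    def type_into(self, locator, text):
        self.fields[locator] = text

    def click(self, locator):
        self.clicked.append(locator)
        # Simulate the application navigating after a successful login.
        if locator == "#login-button" and self.fields.get("#username") == "alice":
            self.title = "Dashboard"

class LoginPage:
    # Locators live in one place; if the GUI changes, only these lines change.
    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#login-button"

    def __init__(self, driver):
        self.driver = driver

    def log_in_as(self, user, password):
        """Expose the behavior ("log in") rather than the widgets."""
        self.driver.type_into(self.USERNAME, user)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
        return DashboardPage(self.driver)

class DashboardPage:
    def __init__(self, driver):
        self.driver = driver

    def is_displayed(self):
        return self.driver.title == "Dashboard"
```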

If the workflow through the system under test changes, for example when a wizard is modified, a poorly structured test suite will end up with a lot of test automation that needs updating.

One way to avoid this is to keep each test really short and to introduce graphs of how navigation can be performed through the system. Each method makes sure it is at the right node in the graph to do what it is supposed to do; if not, it backs up to the parent node in the navigation graph until it can move forward again.
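A navigation graph of this kind can be sketched in Python as a tree of hypothetical nodes with parent links. `ensure_at()` backs up from the current node until it reaches a node on the target's path from the root, then moves forward to the target. The `go_back` and `go_forward` methods only log what a real suite would do (click Cancel, open a menu item, and so on); all names here are assumptions for illustration.

```python
class NavNode:
    """A screen or dialog in the system under test, linked to its parent."""
    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent

    def path_from_root(self):
        node, path = self, []
        while node is not None:
            path.append(node)
            node = node.parent
        return list(reversed(path))

class Navigator:
    """Tracks where the GUI is and moves it to a requested node."""
    def __init__(self, root):
        self.current = root
        self.log = []  # actions performed, for illustration

    def go_back(self):
        # A real suite would click Back/Cancel or close a window here.
        self.log.append(f"back from {self.current.name}")
        self.current = self.current.parent

    def go_forward(self, child):
        # A real suite would click the menu item or button leading to `child`.
        self.log.append(f"open {child.name}")
        self.current = child

    def ensure_at(self, target):
        target_path = target.path_from_root()
        # Back up until we stand on the target's path (a common ancestor).
        while self.current not in target_path:
            self.go_back()
        # Walk forward from the common ancestor to the target.
        for node in target_path[target_path.index(self.current) + 1:]:
            self.go_forward(node)
```

If a wizard step is added or removed, only the graph definition has to change; tests that call `ensure_at()` keep working.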

Navigation mechanisms in GUI test automation are typically introduced when the number of tests increases, to keep maintenance from becoming overwhelming.

Test execution environments for GUI testing

One challenge with GUI-based test automation is that the tests often require a computer with an unlocked desktop to execute.

Many organizations are rightfully reluctant to have such computers standing around in their environment.

To overcome this potential obstacle, different strategies can be applied depending on the circumstances.

Locked-in computers

Sometimes the most time- and cost-efficient approach is simply to bring out old discarded computers, disable the screensaver and lock screen, and then lock them into a compartment that is deemed safe enough. All access to these computers is performed through Remote Desktop/SSH.

There are a few more obstacles with this setup. Often the computer has to be moved between GPOs (Group Policy Objects in Active Directory), and it is not uncommon that a new GPO (without weekly restart, without screen lock, and so forth) has to be specially ordered.

Headless browser execution

Running web-based tests in a headless browser means that no browser window is displayed during execution. Fetching server resources, executing JavaScript, and (when necessary) rendering the page are all performed invisibly.

Browser compatibility issues used to be a big challenge in web testing. Better development frameworks and less diverse web browsers have taken some of the hassle away.

Over time, browser divergence has become less and less apparent. Nowadays most browsers are built on the same code base (Chromium), meaning they share the same rendering engine, the same network stack, and the same JavaScript engine.

This makes them less likely to diverge in behavior.

Headless browsers include HtmlUnit, PhantomJS, and Chrome Headless.

Nowadays it is probably unnecessary to run any tool other than headless Chrome, since it is the most faithful way of executing tests headlessly.

Selenium Grid

Browserstack or other cross-browser-testing tools and services

If the tests run through the Selenium family of tools, there are external services for executing them.

The tools and services in this space range from locally installed tools that, through segmentation, run many versions of the same web browser concurrently on one computer, to hosted services and emulators.

Running through an emulator

Sometimes it is possible to resort to running the tests in an emulator. Many emulators keep running regardless of the restrictions of the host OS, which makes it possible to run applications on a VirtualBox guest OS, or in the Android Emulator, from a locked host OS.

Executing on session 0

By wrapping the test execution as a Windows service, the relevant tools keep running even after a restart. The Windows service can be set to run as any user, and there is a checkbox that allows the service to interact with the desktop.

This gives you test automation that does not need any user to be logged in to the desktop environment to execute scripts at all.

One limitation is that screenshots of Windows Forms based applications end up black.