Automated unit testing is a safety harness that helps keep technical debt at bay.

Nowadays the old term unit testing often feels misplaced, since developers create tests for far more than just the individual classes and methods of the system. It is often more relevant to talk about developer tests, and even that feels like an unnecessary categorization in a high-functioning agile team.

However, for the purpose of this text, any test that is run only to test code is considered a unit test. In contrast, system and acceptance testers often focus on verifying business workflows and infrastructure risks rather than the code.

Test runners include, for example, JUnit, MS Test, NUnit, zUnit, and many more. Each programming language has its own set of tools.

Some concepts are shared by all unit test runners. There are always ways to ignore tests at execution time without removing them, to set timeouts, to run shared code before each test, and much more. Some of the most used concepts are highlighted below.

Unit tests rely on asserts for verification. There are many types of asserts. All of them halt further execution of the test when a verification fails.
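To show why a failed assert stops the rest of the test, here is a framework-free sketch. JUnit's `Assert` methods signal a failed check by throwing an `AssertionError` (or a subclass of it). The hypothetical `MiniAssert` helper below does the same thing, so no statement after the failing check runs. `MiniAssert` and `AssertDemo` are made-up names for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;

// Hypothetical minimal assert helpers, mimicking the style of JUnit's Assert class.
final class MiniAssert {
    private MiniAssert() {}

    static void assertTrue(String message, boolean condition) {
        if (!condition) {
            throw new AssertionError(message); // Aborts the calling test method
        }
    }

    static void assertEquals(Object expected, Object actual) {
        if (!Objects.equals(expected, actual)) {
            throw new AssertionError("expected:<" + expected + "> but was:<" + actual + ">");
        }
    }
}

final class AssertDemo {
    // Records which steps of the "test" were reached.
    static final List<String> reached = new ArrayList<>();

    private AssertDemo() {}

    // A test body where the second check fails, so the third step never runs.
    static void sampleTest() {
        reached.add("step 1");
        MiniAssert.assertEquals(4, 2 + 2);                          // Passes
        reached.add("step 2");
        MiniAssert.assertTrue("length check", "abc".length() == 2); // Fails and throws
        reached.add("step 3");                                      // Never reached
    }

    // Runs the test the way a runner would: a thrown AssertionError marks it as failed.
    static String run() {
        reached.clear();
        try {
            sampleTest();
            return "passed";
        } catch (AssertionError e) {
            return "failed: " + e.getMessage();
        }
    }
}
```

`AssertDemo.run()` returns `failed: length check`, and `reached` contains only the first two steps. In JUnit 4 the same checks would be written with `Assert.assertEquals` and `Assert.assertTrue`.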

Another type of unit test check is testing for expected exceptions thrown by the executed code. Different unit test runners handle this differently.
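Each runner offers its own mechanism for expected exceptions:

- **JUnit 4:** `@Test(expected = IllegalArgumentException.class)`, and since JUnit 4.13 also `Assert.assertThrows`.
- **MS Test:** the `[ExpectedException(typeof(...))]` attribute and `Assert.ThrowsException<T>`.

The sketch below shows, without any framework, what an `assertThrows`-style helper does. The code under test, `parseAge`, is hypothetical.

```java
final class ExpectedExceptions {
    private ExpectedExceptions() {}

    // Hypothetical code under test: rejects negative ages.
    static int parseAge(String text) {
        int age = Integer.parseInt(text.trim());
        if (age < 0) {
            throw new IllegalArgumentException("Age cannot be negative: " + age);
        }
        return age;
    }

    // Runs the action and returns the thrown exception if it has the expected type.
    // The check fails if nothing is thrown or if an exception of another type is thrown.
    static <T extends Throwable> T assertThrows(Class<T> expected, Runnable action) {
        try {
            action.run();
        } catch (Throwable t) {
            if (expected.isInstance(t)) {
                return expected.cast(t);
            }
            throw new AssertionError("Expected " + expected.getSimpleName()
                    + " but got " + t.getClass().getSimpleName(), t);
        }
        throw new AssertionError("Expected " + expected.getSimpleName() + " but nothing was thrown");
    }
}
```

Returning the exception lets the test make further checks, for example on the message. With JUnit 4.13 the call would be `Assert.assertThrows(IllegalArgumentException.class, () -> parseAge("-3"))`.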

Unit test frameworks acknowledge that you may want to run specific code before or after each test, and possibly also before or after the test class as a whole is executed.

These routines are called "Setup" and "Teardown".

The @Ignore annotation prevents a test from being executed at runtime without removing it from the code.

The Ignore attribute/annotation can generally be applied to whole test classes as well as to individual tests.

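```java
import org.junit.After;
import org.junit.AfterClass;
import org.junit.Before;
import org.junit.BeforeClass;
import org.junit.Ignore;
import org.junit.Test;

// ApiTest (providing baseUrl), HttpClient, ConsoleLogger, MockedIntegrations and
// ZephyrConnection are project-specific helper classes, not part of JUnit.
public class EmployeeApiTest extends ApiTest {

    private HttpClient httpClient;

    @BeforeClass // Runs once, before any test in the class
    public static void classSetup() {
        MockedIntegrations.start();
    }

    @Before // Runs before each test
    public void testSetup() {
        httpClient = new HttpClient(new ConsoleLogger());
    }

    @After // Runs after each test
    public void testTeardown() {
        httpClient.dispose();
    }

    @AfterClass // Runs once, after all tests in the class
    public static void classTeardown() {
        MockedIntegrations.stop();
        new ZephyrConnection().pushResults();
    }

    @Test
    public void postingNullShouldReturnBadRequest() {
        httpClient
            .send("POST", baseUrl + "/employee", null)
            .verifyResponse()
            .statusCode(400);
    }

    @Ignore // Skipped at execution time, but kept in the code base
    @Test
    public void getWithoutAcceptHeaderShouldReturnJson() {
        httpClient
            .send("GET", baseUrl + "/employee")
            .verifyResponse()
            .bodyIsJson()
            .statusCode(200);
    }

    @Test
    public void puttingNullShouldReturnBadRequest() {
        httpClient
            .send("PUT", baseUrl + "/employee", null)
            .verifyResponse()
            .statusCode(400);
    }
}
```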

The same example for MS Test:

```csharp
using Microsoft.VisualStudio.TestTools.UnitTesting;

// As in the Java example, ApiTest (providing baseUrl), HttpClient, ConsoleLogger,
// MockedIntegrations and ZephyrConnection are project-specific helpers.
[TestClass]
public class ControllerTests : ApiTest
{
    private HttpClient _httpClient;

    [ClassInitialize] // Runs once, before any test; MS Test requires the TestContext parameter
    public static void ClassSetup(TestContext context)
    {
        MockedIntegrations.start();
    }

    [ClassCleanup] // Runs once, after all tests in the class
    public static void ClassTeardown()
    {
        MockedIntegrations.stop();
        new ZephyrConnection().pushResults();
    }

    [TestInitialize] // Runs before each test
    public void Setup()
    {
        _httpClient = new HttpClient(new ConsoleLogger());
    }

    [TestCleanup] // Runs after each test
    public void Teardown()
    {
        _httpClient.dispose();
    }

    [TestMethod]
    public void PostingNullShouldReturnBadRequest()
    {
        _httpClient
            .send("POST", baseUrl + "/employee", null)
            .verifyResponse()
            .statusCode(400);
    }

    [TestMethod]
    [Ignore] // Skipped at execution time
    public void GetWithoutAcceptHeaderShouldReturnJson()
    {
        _httpClient
            .send("GET", baseUrl + "/employee")
            .verifyResponse()
            .bodyIsJson()
            .statusCode(200);
    }

    [TestMethod]
    public void PuttingNullShouldReturnBadRequest()
    {
        _httpClient
            .send("PUT", baseUrl + "/employee", null)
            .verifyResponse()
            .statusCode(400);
    }
}
```

Disregard the subtle differences in naming conventions and bracket placement between Java and C# for now.
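The sketch below is a deliberately tiny, reflection-based runner that makes the lifecycle ordering concrete. It rests on assumptions and is not how JUnit or MS Test are implemented. The annotations (`@ClassSetup`, `@Setup`, `@Check`, `@Skip`, `@Teardown`, `@ClassTeardown`) and the classes `MiniRunner` and `SampleTests` are made up for illustration. It mirrors the behavior described above:

- Class-level setup and teardown run once.
- Per-test setup and teardown wrap every test.
- Ignored tests are reported but never executed.

Like JUnit 4, it creates a fresh instance of the test class for each test.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-ins for @BeforeClass, @Before, @Test, @Ignore, @After and @AfterClass.
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface ClassSetup {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface Setup {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface Check {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface Skip {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface Teardown {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface ClassTeardown {}

final class MiniRunner {
    static final List<String> log = new ArrayList<>();

    private MiniRunner() {}

    static void run(Class<?> testClass) {
        Method[] methods = testClass.getDeclaredMethods();
        Arrays.sort(methods, Comparator.comparing(Method::getName)); // Deterministic order for this sketch
        try {
            invokeAll(methods, ClassSetup.class, null);              // Once, before any test
            for (Method test : methods) {
                if (!test.isAnnotationPresent(Check.class)) continue;
                if (test.isAnnotationPresent(Skip.class)) {          // Ignored: reported, never executed
                    log.add("skipped " + test.getName());
                    continue;
                }
                Object instance = testClass.getDeclaredConstructor().newInstance(); // Fresh instance per test
                invokeAll(methods, Setup.class, instance);
                try {
                    test.invoke(instance);
                    log.add("passed " + test.getName());
                } catch (InvocationTargetException e) {              // The test threw, e.g. an AssertionError
                    log.add("failed " + test.getName() + ": " + e.getCause().getMessage());
                } finally {
                    invokeAll(methods, Teardown.class, instance);    // Runs even when the test failed
                }
            }
            invokeAll(methods, ClassTeardown.class, null);           // Once, after all tests
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Could not run " + testClass.getName(), e);
        }
    }

    private static void invokeAll(Method[] methods, Class<? extends Annotation> marker, Object instance)
            throws ReflectiveOperationException {
        for (Method m : methods) {
            if (m.isAnnotationPresent(marker)) {
                m.invoke(Modifier.isStatic(m.getModifiers()) ? null : instance);
            }
        }
    }
}

// Example test class written against the hypothetical annotations.
class SampleTests {
    @ClassSetup static void startMocks() { MiniRunner.log.add("class setup"); }
    @Setup void setUp() { MiniRunner.log.add("setup"); }
    @Check void addition() { if (1 + 1 != 2) throw new AssertionError("math is broken"); }
    @Check @Skip void notReadyYet() { throw new AssertionError("should never run"); }
    @Teardown void tearDown() { MiniRunner.log.add("teardown"); }
    @ClassTeardown static void stopMocks() { MiniRunner.log.add("class teardown"); }
}
```

Calling `MiniRunner.run(SampleTests.class)` fills `MiniRunner.log` with these entries, in this order:

1. `class setup`
2. `setup`
3. `passed addition`
4. `teardown`
5. `skipped notReadyYet`
6. `class teardown`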

One type of developer test checks the response time of end-points in an environment where the whole system is running without internal fakes. One way of doing this is to measure the execution time of the test method. Another is to set an explicit timeout on the test.

```java
@Test(timeout = 500) // Fails the test if it runs longer than 500 milliseconds
public void endpointResponseTimeShouldBeLessThan500ms() {
    httpClient
        .send("GET", baseUrl + "/api/v2/employees")
        .verifyResponse()
        .statusCode(200);
}

@Test
public void endpointResponseTimeShouldBeLessThan500msMeasured() {
    long startTime = System.currentTimeMillis();
    httpClient
        .send("GET", baseUrl + "/api/v2/employees")
        .verifyResponse()
        .statusCode(200);
    long elapsed = System.currentTimeMillis() - startTime; // Measured once, so message and check agree
    Assert.assertTrue(
        "Request took " + elapsed + " ms", // Message shown if the assertion fails
        elapsed < 500);                    // Validation
}
```

For tests that include asynchronous requests, always make sure the request has finished, since the test might complete before the thread has.
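The sketch below shows the fix using only the JDK:

- `CompletableFuture.supplyAsync` stands in for an asynchronous request. The `fetchStatus` method and its simulated 100 ms latency are hypothetical.
- The test blocks on `get` with a timeout, so it cannot end while the request is still running.
- A request that never completes turns into a test failure instead of a hanging build.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class AsyncRequestCheck {
    private AsyncRequestCheck() {}

    // Hypothetical asynchronous request: completes with status 200 on another thread after ~100 ms.
    static CompletableFuture<Integer> fetchStatus() {
        return CompletableFuture.supplyAsync(() -> {
            try {
                Thread.sleep(100); // Simulated network latency
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return 200;
        });
    }

    // Blocks until the request has finished (or the timeout expires) before the result is used,
    // so the test cannot end while the request is still running on another thread.
    static int awaitStatus(long timeoutMillis) {
        try {
            return fetchStatus().get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            throw new AssertionError("Request did not finish within " + timeoutMillis + " ms");
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new AssertionError("Interrupted while waiting for the request", e);
        } catch (ExecutionException e) {
            throw new AssertionError("Request failed", e.getCause());
        }
    }
}
```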

For MS Test:

```csharp
[TestMethod]
[Timeout(500)] // Fails the test if it runs longer than 500 milliseconds
public void GetWithoutAcceptHeaderShouldReturnJson()
{
    _httpClient
        .send("GET", baseUrl + "/employee")
        .verifyResponse()
        .bodyIsJson()
        .statusCode(200);
}

// Other approach: measure the duration explicitly (Stopwatch is in System.Diagnostics)
[TestMethod]
public void GetWithoutAcceptHeaderShouldReturnJsonWithin500ms()
{
    var timer = Stopwatch.StartNew(); // Start a new timer
    _httpClient
        .send("GET", baseUrl + "/employee")
        .verifyResponse()
        .bodyIsJson()
        .statusCode(200);
    timer.Stop();
    Assert.IsTrue(
        timer.ElapsedMilliseconds < 500,                      // Timer validation
        "Request took " + timer.ElapsedMilliseconds + " ms"); // Message shown if the assertion fails
}
```

Similar approaches can be used for NUnit and other common runners.

Often you want to run similar tests with different data. Data-driven unit tests are a good fit for this, since they avoid code duplication and improve readability.

Data-driven tests work differently on different platforms. Most rely on annotations/attributes that hold the parameters for the tests, and the test is run once for each data row.
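Each platform has its own built-in mechanism for data-driven tests:

- **JUnit 4:** `@RunWith(Parameterized.class)` together with a static method annotated `@Parameters`.
- **MS Test:** `[DataRow(...)]` attributes on a `[DataTestMethod]`.

Stripped of framework machinery, the idea is a table of rows fed through one test body. The sketch below shows that with hypothetical names (`isValidEmployeeId`, `Row`, `runAll`).

```java
import java.util.ArrayList;
import java.util.List;

final class DataDrivenDemo {
    private DataDrivenDemo() {}

    // Hypothetical code under test: an employee id is "E" followed by exactly four digits.
    static boolean isValidEmployeeId(String id) {
        return id != null && id.matches("E\\d{4}");
    }

    // One data row: the input and the expected outcome.
    static final class Row {
        final String input;
        final boolean expected;

        Row(String input, boolean expected) {
            this.input = input;
            this.expected = expected;
        }
    }

    static final List<Row> ROWS = List.of(
            new Row("E1234", true),
            new Row("E123", false),
            new Row("X1234", false),
            new Row(null, false));

    // Runs the same test body once per row and collects every failing row,
    // so one bad row does not hide the results of the others.
    static List<String> runAll(List<Row> rows) {
        List<String> failures = new ArrayList<>();
        for (Row row : rows) {
            boolean actual = isValidEmployeeId(row.input);
            if (actual != row.expected) {
                failures.add(row.input + ": expected " + row.expected + " but was " + actual);
            }
        }
        return failures;
    }
}
```

Adding a case means adding a row, not another test method. Real runners go further and report each row as a separate test result.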

Unless your architecture relies on queueing (such as MQ), you might want to test the concurrency of components.

A common way to test concurrency in unit tests is to extend the test runner into a custom runner. That runner identifies the test methods in the test class but executes them in parallel threads through a thread pool. This is often combined with checks on execution duration.

One approach is to first run the test once in a single thread. This makes sure the system is up and running and that caches are populated, so that warm-up effects do not skew the results. Then run a timed execution in a single thread, followed by another execution with a few threads (around five). Finally, check that the multi-threaded run does not take significantly longer than the single-threaded run.
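The sketch below implements the warm-up, single-threaded and multi-threaded steps with an `ExecutorService`. It checks that five concurrent calls do not take more than a chosen factor longer than one call. If they did, requests would probably be processed one at a time. The stand-in `callEndpoint` (sleeping about 50 ms), the thread count and the factor of 3 are all assumptions to adapt.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class ConcurrencyCheck {
    private ConcurrencyCheck() {}

    // Hypothetical stand-in for one request to the component under test (~50 ms of work).
    static int callEndpoint() {
        try {
            Thread.sleep(50);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return 200;
    }

    // Wall-clock milliseconds for `threads` simultaneous calls, one per pooled thread.
    static long timeConcurrentCalls(int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Callable<Integer>> calls = new ArrayList<>();
            for (int i = 0; i < threads; i++) {
                calls.add(ConcurrencyCheck::callEndpoint);
            }
            long start = System.nanoTime();
            for (Future<Integer> result : pool.invokeAll(calls)) { // invokeAll waits for all calls
                int status = result.get();
                if (status != 200) {
                    throw new AssertionError("Unexpected status " + status);
                }
            }
            return (System.nanoTime() - start) / 1_000_000;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("Concurrent run failed", e);
        } finally {
            pool.shutdown();
        }
    }

    // 1) warm-up call, 2) timed single-threaded run, 3) timed run with `threads` threads.
    // Passes if the concurrent run takes at most `maxFactor` times as long as the single run.
    static boolean scalesUnderLoad(int threads, double maxFactor) {
        callEndpoint();
        long single = Math.max(1, timeConcurrentCalls(1));
        long concurrent = timeConcurrentCalls(threads);
        return concurrent <= single * maxFactor;
    }
}
```

In a real test, `callEndpoint` would call the running system, and the test would assert `scalesUnderLoad(5, 3.0)`.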

Unit tests should be runnable directly from the IDE for ease of debugging, but also on the continuous integration server when code is checked in. This adds an extra check of dependencies and configuration and reduces works-on-my-machine problems.

All unit test runners support execution from the command line interface (CLI). Some require an extra plugin for this, but they all work from the command line.

Common CI/CD engines, such as Azure DevOps, Jenkins and TeamCity, have specific plugins for the most well-known unit test runners. These can be configured in the build jobs.

For any test runner that is not supported by a CI server plugin, there is always the CLI option. Running unit tests in the CI/CD pipeline should therefore never pose a problem.

Build tools like Maven or dotnet.exe/MSBuild.exe can also run the unit tests out of the box. The Maven plugin for this is called Surefire.

Most unit test frameworks are horrible at logging if you approach them from a system tester's perspective. However, anyone reading the tests and their outcome probably has access to an IDE with debugging tools. This is therefore not a big problem, since you can step through the tests to see what happens. Unit-level tests generally test only the code of the system, so they are less prone to intermittent, flaky failures. This makes it easy to re-run a test and reproduce the same problem.

System-level testing generally aims to find infrastructure problems as well as code problems. That makes it more likely to run into intermittent failures that need extensive logging to be understood.

Common logging frameworks should be used in unit tests with some care, at least if your test classes do not use the same dependencies as the application under test. Otherwise you may end up with different versions of the same logging dependency within the same runtime environment.

Many third-party tools can import the output formats of the common runners to display test results. Jenkins, Azure DevOps, TeamCity and many more readily use these formats, and there are plugins for Jira and other tools that use them too.

Some tools, like MS Test and NUnit, provide out-of-the-box integration with Azure DevOps and TFS. Other tools provide parsers for test output files to propagate the test results to different tools.

Many test management tools also come with importers or polling plugins for test results in common formats.
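Many of these importers read the JUnit-style XML report, the format Maven Surefire writes to `TEST-*.xml` files. There is no single formal schema, and tools differ in which attributes they require. Treat the following as a sketch of a commonly used subset, not a specification:

- a `testsuite` element with `tests`, `failures`, `errors` and `skipped` counts,
- one `testcase` element per test,
- `failure` and `skipped` child elements where they apply.

`JUnitXmlReport` and its `Result` type are hypothetical.

```java
import java.util.List;

final class JUnitXmlReport {
    private JUnitXmlReport() {}

    // Hypothetical outcome of one test; status is "passed", "failed" or "skipped".
    static final class Result {
        final String name;
        final String status;
        final String message; // Only used for failed tests

        Result(String name, String status, String message) {
            this.name = name;
            this.status = status;
            this.message = message;
        }
    }

    // Escapes XML special characters in attribute values (& must be replaced first).
    static String escape(String text) {
        return text.replace("&", "&amp;").replace("<", "&lt;")
                   .replace(">", "&gt;").replace("\"", "&quot;");
    }

    static String render(String suiteName, List<Result> results) {
        long failures = results.stream().filter(r -> r.status.equals("failed")).count();
        long skipped = results.stream().filter(r -> r.status.equals("skipped")).count();
        StringBuilder xml = new StringBuilder("<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n");
        xml.append("<testsuite name=\"").append(escape(suiteName))
           .append("\" tests=\"").append(results.size())
           .append("\" failures=\"").append(failures)
           .append("\" errors=\"0\" skipped=\"").append(skipped).append("\">\n");
        for (Result r : results) {
            xml.append("  <testcase classname=\"").append(escape(suiteName))
               .append("\" name=\"").append(escape(r.name)).append('"');
            if (r.status.equals("failed")) {
                xml.append(">\n    <failure message=\"").append(escape(r.message))
                   .append("\"/>\n  </testcase>\n");
            } else if (r.status.equals("skipped")) {
                xml.append(">\n    <skipped/>\n  </testcase>\n");
            } else {
                xml.append("/>\n");
            }
        }
        return xml.append("</testsuite>\n").toString();
    }
}
```

The resulting string would typically be written to a file, for example `TEST-EmployeeApiTest.xml`, where the CI server or test management tool is configured to pick it up.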

The most common metrics for unit testing are code coverage and failure rate. More mature teams also include metrics like:

When testing a system you need components other than those the system under test is made of: mocking frameworks, HTTP clients, JSON parsers, testing frameworks and more. Different development environments handle the dependency scope differently, but they all support it.

```xml
<dependencies>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
        <scope>test</scope> <!-- Test-scope dependency only -->
    </dependency>
</dependencies>
```

The scope parameter tells Maven that this dependency is only used for testing.

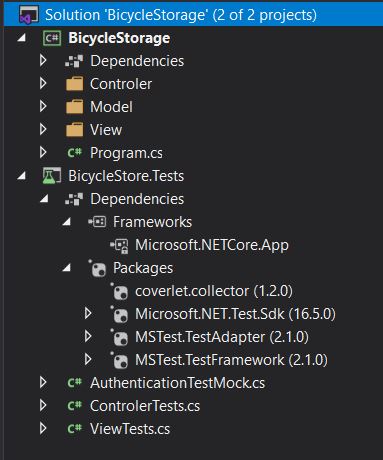

In C# (.NET/.NET Core) the dependency management system is called NuGet. NuGet dependencies are scoped per code project. The usual way of using test-scope dependencies in the Microsoft world is therefore to create a dedicated test project in your solution. This way the test project may use dependencies that differ from those of the system under test.

It's far easier to create stable unit tests if the system under test is developed with dependency injection/inversion of control. This makes it possible to replace specific code components with fakes at test time. For example, you may want to test something, but the login solution is too complex and rigid to make testing feasible. If the login functionality is tested elsewhere, it makes sense to replace the login mechanism when testing system-internal code that should work once logged in. With dependency injection you simply implement a replacement login component for unit testing, and there are mocking frameworks to help you with this.
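Here is a minimal sketch of constructor injection. It assumes a hypothetical `Authenticator` interface in front of the login solution. The code under test, `PayrollService` (also hypothetical), only knows the interface, so a test can hand it a `FakeAuthenticator` instead of the real, rigid login component. A mocking framework such as Mockito could generate the same replacement. It is written by hand here to keep the example dependency-free.

```java
// Hypothetical abstraction over the login solution.
interface Authenticator {
    // Returns the user name for a valid token, or null if the token is not accepted.
    String authenticate(String token);
}

// Hypothetical code under test: it only needs *some* Authenticator, injected via the constructor.
final class PayrollService {
    private final Authenticator authenticator;

    PayrollService(Authenticator authenticator) {
        this.authenticator = authenticator;
    }

    String greeting(String token) {
        String user = authenticator.authenticate(token);
        if (user == null) {
            throw new SecurityException("Not logged in");
        }
        return "Welcome, " + user;
    }
}

// Test-only replacement: accepts one known token without contacting any real login system.
final class FakeAuthenticator implements Authenticator {
    @Override
    public String authenticate(String token) {
        return "test-token".equals(token) ? "Testus Testson" : null;
    }
}
```

In production, the real authenticator would be passed to the constructor instead, for example by a dependency injection container. `PayrollService` itself stays unchanged.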

As Martin Fowler puts it:

Three different approaches to achieve the same test:

```java
import org.junit.Assert;
import org.junit.Test;
import org.mockito.Mockito;

// DbManager, InMemoryDb and ServiceManager belong to the system under test.
public class ServiceManagerTest {

    @Test // A mock is a lightweight replacement of the DB object
    public void serviceManagerUserNameQueryShouldReturnNameFromDb() {
        // Get a mocked object
        DbManager mockedDb = Mockito.mock(DbManager.class);
        // Set up its behavior: only this exact SQL string returns the name, other calls return null
        Mockito
            .when(mockedDb.query("SELECT Name FROM Users WHERE Id=1"))
            .thenReturn("Testus Testson");
        // Perform the assertion
        Assert.assertEquals("Testus Testson",
            new ServiceManager(mockedDb).getNameForUserId(1));
    }

    @Test // Replacing the actual functionality with something else you have control over
    public void serviceManagerUserNameQueryShouldReturnNameFromStubbedDb() {
        // Set up a replacement DB
        InMemoryDb stubbedDb = new InMemoryDb();
        stubbedDb.executeCommand("CREATE DATABASE testDb");
        stubbedDb.executeCommand("CREATE TABLE Users (Id int, Name varchar(255))");
        stubbedDb.executeCommand("INSERT INTO Users (Id, Name) VALUES (1, 'Testus Testson')");
        DbManager stubbedDbManager = new DbManager(stubbedDb);
        // Perform the assertion
        Assert.assertEquals("Testus Testson",
            new ServiceManager(stubbedDbManager).getNameForUserId(1));
    }

    // Implementing a substitute class
    static class FakeDb extends DbManager {
        FakeDb() {
            // Java always calls a superclass constructor (implicitly super() here), so this only
            // works if DbManager has a constructor that does not start the real database.
        }

        @Override
        public String query(String sql) {
            return "Testus Testson"; // Canned answer, whatever the SQL
        }
    }

    @Test
    public void serviceManagerUserNameQueryShouldReturnNameFromFakedDb() {
        // Get the faked DB
        DbManager fakedDb = new FakeDb();
        // Perform the assertion
        Assert.assertEquals("Testus Testson", new ServiceManager(fakedDb).getNameForUserId(1));
    }
}
```

Of course, everything is a lot easier if proper dependency injection provides interface abstractions for functionality. It's always important to separate what is done from how it's done, so that components can easily be substituted.
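To illustrate the point about interface abstractions, here is a hypothetical variant of the example above. The service depends on a `UserRepository` interface rather than the concrete `DbManager`. With that seam in place, the substitute is just a lambda: no subclassing, no database and no superclass constructor to work around. `UserRepository`, `UserNameService` and `UserNameServiceDemo` are illustrative names, not part of the original code.

```java
// Hypothetical interface: *what* the service needs, not *how* the name is fetched.
interface UserRepository {
    String findNameById(int id);
}

// Hypothetical service that depends only on the interface (constructor injection).
final class UserNameService {
    private final UserRepository users;

    UserNameService(UserRepository users) {
        this.users = users;
    }

    String getNameForUserId(int id) {
        return users.findNameById(id);
    }
}

final class UserNameServiceDemo {
    private UserNameServiceDemo() {}

    // In a test, a lambda is enough to stub the repository: no database is involved.
    static String lookUpWithStub(int id) {
        UserRepository stub = userId -> userId == 1 ? "Testus Testson" : null;
        return new UserNameService(stub).getNameForUserId(id);
    }
}
```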

End-point testing could be considered component testing, unit testing or system-level testing. It depends on how much of the system is running in order to execute the test. If the test is performed against an in-memory database, it's not a system test.

Many developers take the time to implement end-point tests.
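The sketch below shows a self-contained end-point test that needs nothing but the JDK. It uses `com.sun.net.httpserver.HttpServer` (module `jdk.httpserver`) and `java.net.http.HttpClient` (Java 11+). This client is the JDK's own, not the project-specific `HttpClient` used in the earlier examples. The `/employee` handler is a hypothetical stand-in for the real application, and the test starts it itself. That places this test at the component end of the spectrum described above rather than among system tests.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class EndpointTestSketch {
    private EndpointTestSketch() {}

    // Hypothetical stand-in for the real /employee end-point, on a free local port:
    // POST with an empty body -> 400, POST with a body -> 201, anything else -> 405.
    static HttpServer startServer() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/employee", exchange -> {
            byte[] body;
            try (InputStream in = exchange.getRequestBody()) {
                body = in.readAllBytes();
            }
            int status;
            if (!"POST".equals(exchange.getRequestMethod())) {
                status = 405;
            } else {
                status = body.length == 0 ? 400 : 201;
            }
            exchange.sendResponseHeaders(status, -1); // -1: no response body
            exchange.close();
        });
        server.start();
        return server;
    }

    // The end-point test itself: POST the given body (null means no body) and return the status code.
    static int postEmployee(String json) {
        HttpServer server = null;
        try {
            server = startServer();
            String baseUrl = "http://127.0.0.1:" + server.getAddress().getPort();
            HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/employee"))
                    .POST(json == null
                            ? HttpRequest.BodyPublishers.noBody()
                            : HttpRequest.BodyPublishers.ofString(json))
                    .build();
            HttpClient client = HttpClient.newBuilder().version(HttpClient.Version.HTTP_1_1).build();
            return client.send(request, HttpResponse.BodyHandlers.discarding()).statusCode();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            if (server != null) {
                server.stop(0); // Always release the port, also when the request fails
            }
        }
    }
}
```

`postEmployee(null)` mirrors `postingNullShouldReturnBadRequest` from the first example. Pointing `baseUrl` at a deployed environment instead would turn the same test into a system-level check.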