The evolving test automation landscape

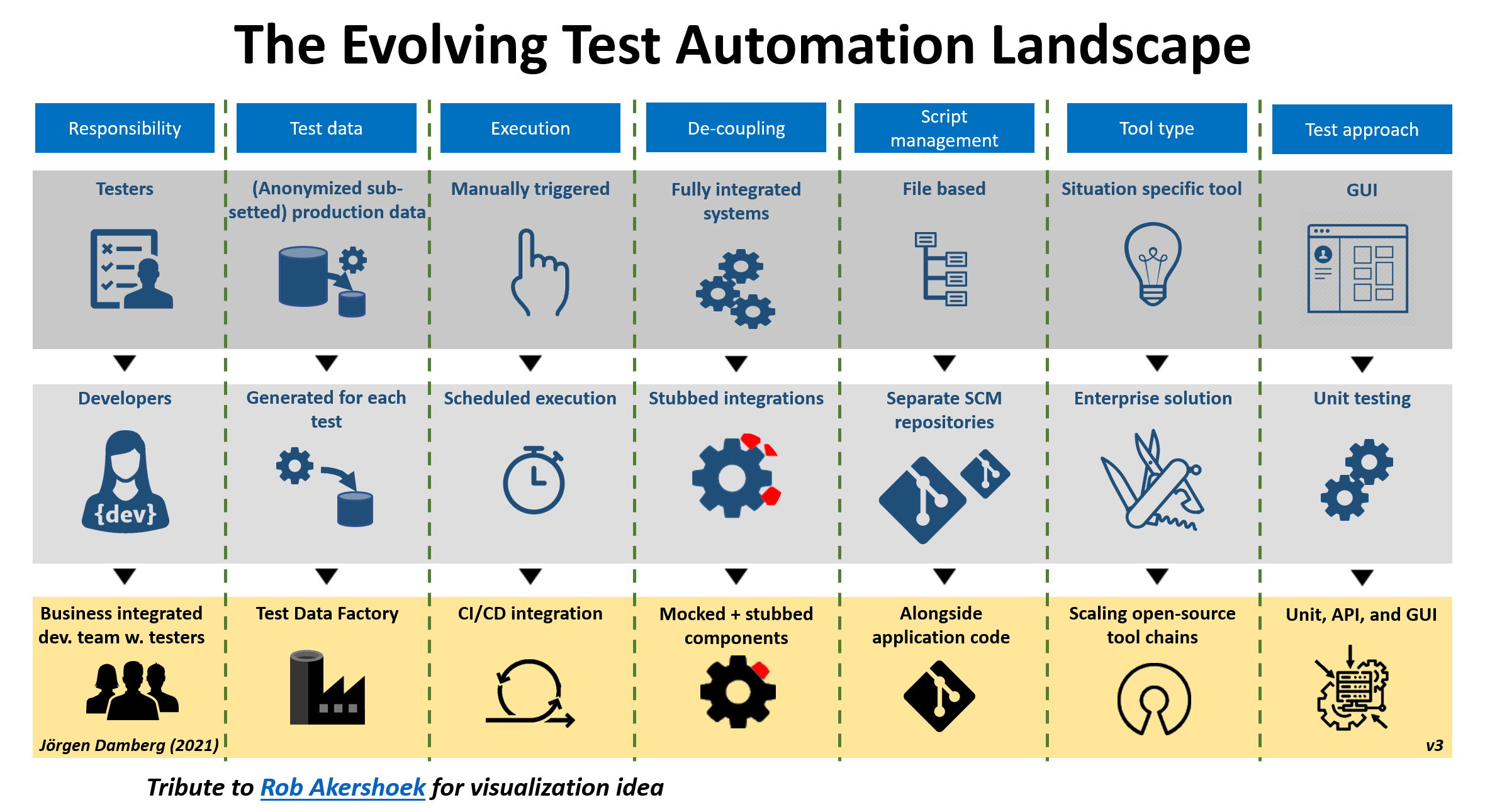

(2021-03-30) Inspired by Rob Akershoek and his image of The Evolving Digital Delivery Model, I made a similar general chart to describe how test automation progresses as organizations evolve.

The chart below tries to depict how many organizations evolve with their test automation efforts.

It's not meant to be a guideline or roadmap, but something to guide discussions about test automation.

Insights for this chart are derived from numerous test automation assignments, many of which have been at a strategic level.

The same experience has also shown that many different circumstances can make parts of this chart debatable.

For example, if the system under test is a SaaS system, and it is crucial enough to your business that you implement test automation for it, you will likely still find it problematic to version control your test automation code together with the code for the system under test.

As another example, if the goal of the test is to make sure business processes can be performed end-to-end through several systems, it could make sense to use some method for identifying existing data rather than testing with constructed data.
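As a minimal sketch of what identifying data could look like, the Python snippet below searches already existing records for one that fits the test, instead of creating a new record. The `Customer` type, the `find_test_data` helper and the sample records are all hypothetical; in a real setup the records would come from a query against the test environment.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Customer:
    # Hypothetical record type, used only for illustration.
    customer_id: str
    country: str
    open_orders: int


def find_test_data(
    records: Iterable[Customer],
    predicate: Callable[[Customer], bool],
) -> Optional[Customer]:
    """Return the first existing record that satisfies the predicate, or None."""
    return next((record for record in records if predicate(record)), None)


# Stand-in for data that already exists in the test environment (assumption).
EXISTING_CUSTOMERS = [
    Customer("C-001", "SE", 0),
    Customer("C-002", "NO", 2),
    Customer("C-003", "SE", 1),
]

# The test needs a Swedish customer with at least one open order.
candidate = find_test_data(
    EXISTING_CUSTOMERS,
    lambda c: c.country == "SE" and c.open_orders > 0,
)
```

If no record matches, the helper returns `None`, and the test can then report missing test data rather than a defect in the system.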

For the most part this chart still makes sense. It's nothing new or visionary, but hopefully a relevant description of how test automation usually progresses in an organization as it matures around the subject. The background is, of course, the factors highlighted by Rob Akershoek.

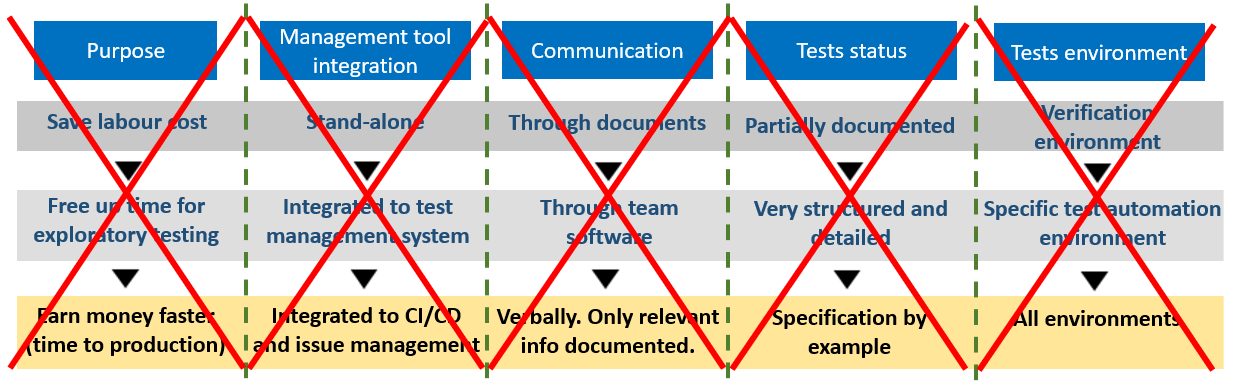

What's been left out of this chart, and why?

In any attempt to describe a complex reality, as with test automation, you have to leave things out. For example, in the chart above the following categories could have been included but are left out.

Purpose

The purpose of test automation is of course one of its most relevant aspects, but it has intentionally been left out. The reason is that organizations start test automation initiatives for many different reasons, and many of these are valid. I've heard valid reasons like:

- We don't want our valuable testers to resign, and they are pushing for automation

- We need faster time to market

- We want to prepare for future low-key development, but keep a well-tested system

- We're increasing release frequency and don't have time for manual regression testing

- End-users are frustrated with our bad deliveries, so we are reluctant to have them test. We need to make sure the system always works.

- We don't want to continue developing on a broken system. We need to make sure it works for efficiency.

Management tool integration

For a lot of reasons, it often makes sense to integrate the test automation with issue tracking systems, CI/CD pipeline tools, test management systems, requirement systems, change process tracking tools, version control systems, and so forth.

Which type of integration is relevant, and when, depends on the tools used, the organizational setup and many other factors. Since it is such a complex matter it has been left out.

Communication and Test status

The communication culture is one of the most relevant factors for test automation readiness. However, there is no natural flow of maturity that correlates with the test automation effort in any foreseeable way.

It would be tempting to think that less documentation is better, but then again, the tests need to be documented in such a way that even a computer can execute them. There are mechanisms like Specification-by-example to enable "living documentation", but even these come with a lot of annoying limitations and are by no means a silver bullet. In most implementations I've seen, it's merely an abstraction to help with structuring the test automation code.

Many times, there are also compliance requirements that affect how test automation is implemented, and those rarely lead to less documentation, but test automation can often help automate the documentation process.
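To illustrate the idea of examples that serve as both tests and documentation, here is a minimal sketch in plain Python. It does not use any particular Specification-by-example tool. The shipping rule, the `Example` type and the helper names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Example:
    # One business example: readable by people, executable by the computer.
    description: str
    order_total: float
    expected_shipping: float


def shipping_fee(order_total: float) -> float:
    """Hypothetical business rule standing in for the system under test."""
    return 0.0 if order_total >= 500 else 49.0


EXAMPLES = [
    Example("Small order pays standard shipping", 100.0, 49.0),
    Example("Order at the threshold ships free", 500.0, 0.0),
    Example("Large order ships free", 900.0, 0.0),
]


def run_examples(examples: List[Example]) -> List[Tuple[Example, bool]]:
    """Execute every example and pair it with its pass/fail outcome."""
    return [
        (ex, shipping_fee(ex.order_total) == ex.expected_shipping)
        for ex in examples
    ]


def render_report(results: List[Tuple[Example, bool]]) -> str:
    """Render the executed examples as a table that can be filed as documentation."""
    lines = [
        "| Example | Order total | Expected shipping | Result |",
        "| --- | --- | --- | --- |",
    ]
    for ex, passed in results:
        outcome = "pass" if passed else "FAIL"
        lines.append(
            f"| {ex.description} | {ex.order_total:.2f} "
            f"| {ex.expected_shipping:.2f} | {outcome} |"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_report(run_examples(EXAMPLES)))
```

The same list of examples drives the test run and produces the report. This is also roughly where the limitation shows: the examples stay readable only as long as the rule fits neatly into a table.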

Test environment

The test environment seems obvious to include, but on closer inspection, the aspects of test environments that are relevant for test automation are system integrations, mandate over the test environment, and test data. Those aspects are already included in the larger picture above.

Recommended further reading

Test automation is a complex subject. Naturally, a lot of relevant context-dependent issues have been left out. For a more thorough walk-through of test automation concerns, please see:

- General test automation concerns

- Test automation assessment checklist

- API test automation

- GUI test automation

- Unit testing introduction

- Various created test automation resources